I am

Aaron Wong

My name is Aaron Wong, and I am currently an undergraduate student at the University of California San Diego, majoring in cognitive science and minoring in computer science. I want this portfolio to be more than just a place to showcase my work; I want it to be a place where I can express my personality through my own interests.

This portfolio is still under construction

School: University of California San Diego

Expected graduation: Winter 2019

My Resume

LinkedIn: https://www.linkedin.com/in/aaron-wong-795878170/

GitHub: https://github.com/FlamingC4

Email: aar34w23@gmail.com

A couple of projects I have worked on in my career.

The Beginnings:

and so, it starts...

Synopsis:

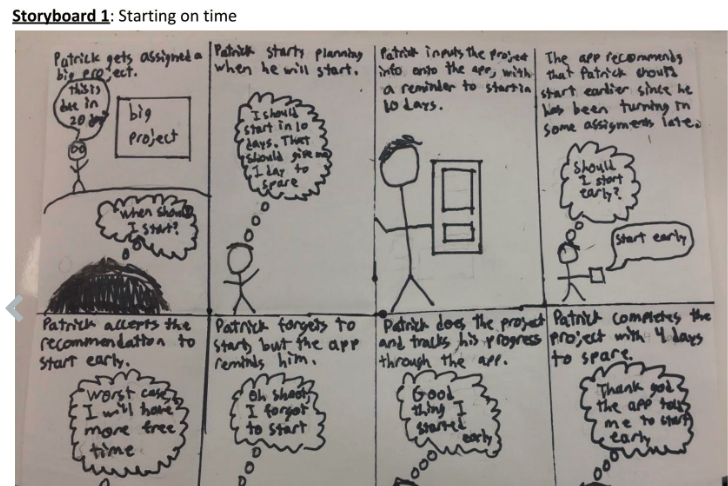

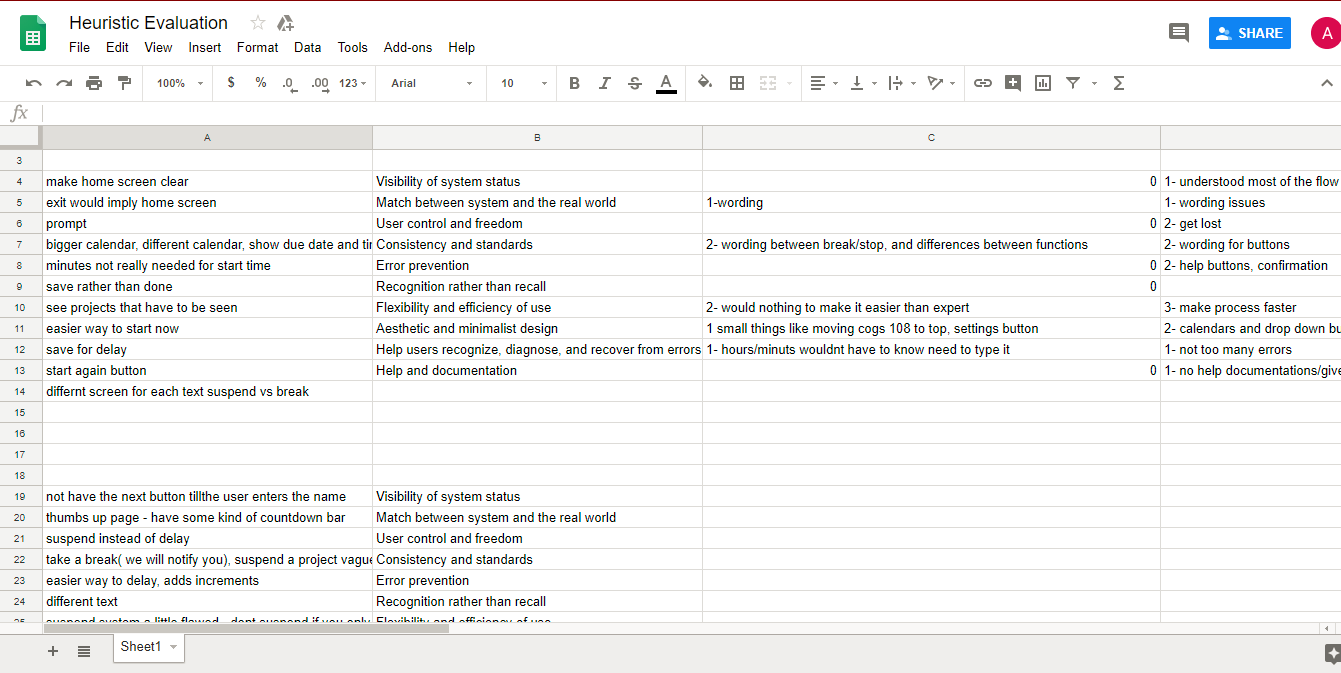

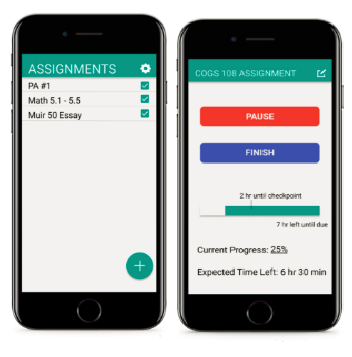

Do It Early is an app that helps you stay on track with your assignments through user interaction. With this app, users can set a start time for an assignment and delay it if necessary. Throughout the project, we received feedback from our peers, which helped us iterate on the idea. Here, I will go over the design process and the thinking behind our idea.

Of course, there is also the technical side...

We used a "fake" database to store our data

All of the information about the projects is stored in a JSON file, meaning that our database is available only while the application is running. This database is mainly used by the enter-details page, where user input, such as when an assignment is due, is recorded.
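A minimal sketch of the "fake" database idea, not the project's actual code: the store is plain data parsed from JSON and kept in memory, so anything added is lost once the process exits. The names `createStore`, `addAssignment`, and `list`, and the shape of the data, are assumptions made for illustration.

```javascript
// Hypothetical seed data; in an app like this it would come from the JSON file.
const seed = JSON.stringify({ assignments: [] });

// Build an in-memory store from JSON text. Nothing is written back to disk,
// so the data only lives as long as the running process.
function createStore(jsonText) {
  const data = JSON.parse(jsonText);
  return {
    // Record user input, such as the assignment name and when it is due.
    addAssignment(name, dueDate) {
      const entry = { id: data.assignments.length + 1, name, dueDate };
      data.assignments.push(entry);
      return entry;
    },
    // Return a copy so callers cannot mutate the store by accident.
    list() {
      return data.assignments.slice();
    },
  };
}

const store = createStore(seed);
store.addAssignment("Essay draft", "2019-03-01");
console.log(store.list());
```

Creating a second store from the same seed starts empty again, which is the trade-off of this approach: it is simple to set up for a prototype, but nothing persists between runs.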

Node for the backend

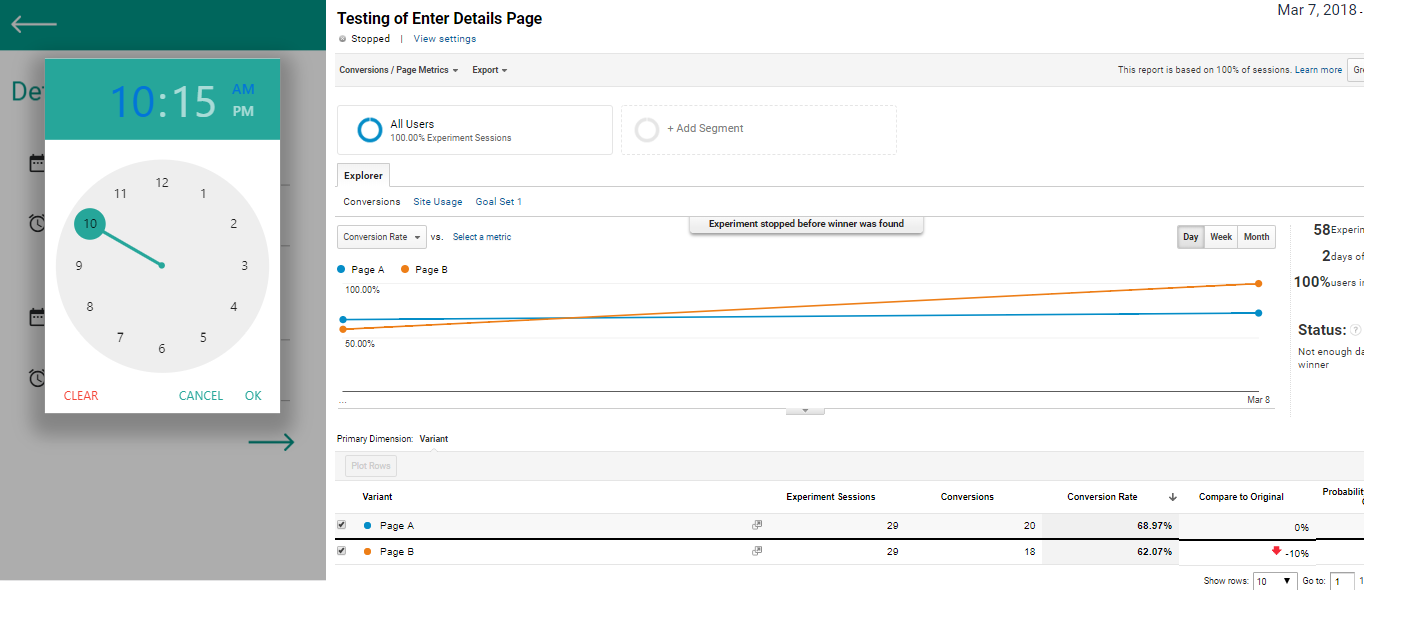

We used Node to route between pages and to supply them with data from the JSON file. Node also allowed us to produce two different versions of our app and render pages differently depending on the version. We used this with Google Analytics to compare the two versions of the app.
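As a rough illustration of rendering the same data differently per version, here is a small sketch in plain JavaScript. It is not the project's actual routing code: the paths `/home` and `/homeB`, the function names, and the markup are all hypothetical, and a real Node app would wire `route` into an HTTP server.

```javascript
// Hypothetical assignment data, standing in for the contents of the JSON file.
const assignments = [{ name: "Essay draft", dueDate: "2019-03-01" }];

// Render the same data differently depending on which version was requested.
function renderHome(version, items) {
  const rows = items.map((a) => `${a.name} (due ${a.dueDate})`);
  if (version === "B") {
    // Version B: an ordered list.
    return `<ol>${rows.map((r) => `<li>${r}</li>`).join("")}</ol>`;
  }
  // Version A (default): a single paragraph.
  return `<p>${rows.join(", ")}</p>`;
}

// Map a request path to a version (assumed paths, for illustration only).
function route(path) {
  if (path === "/home") return renderHome("A", assignments);
  if (path === "/homeB") return renderHome("B", assignments);
  return null; // unknown path
}

console.log(route("/home"));
console.log(route("/homeB"));
```

Keeping the version choice in one place like this makes it straightforward to send each variant's traffic to an analytics tool and compare the results.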

Don't build from scratch

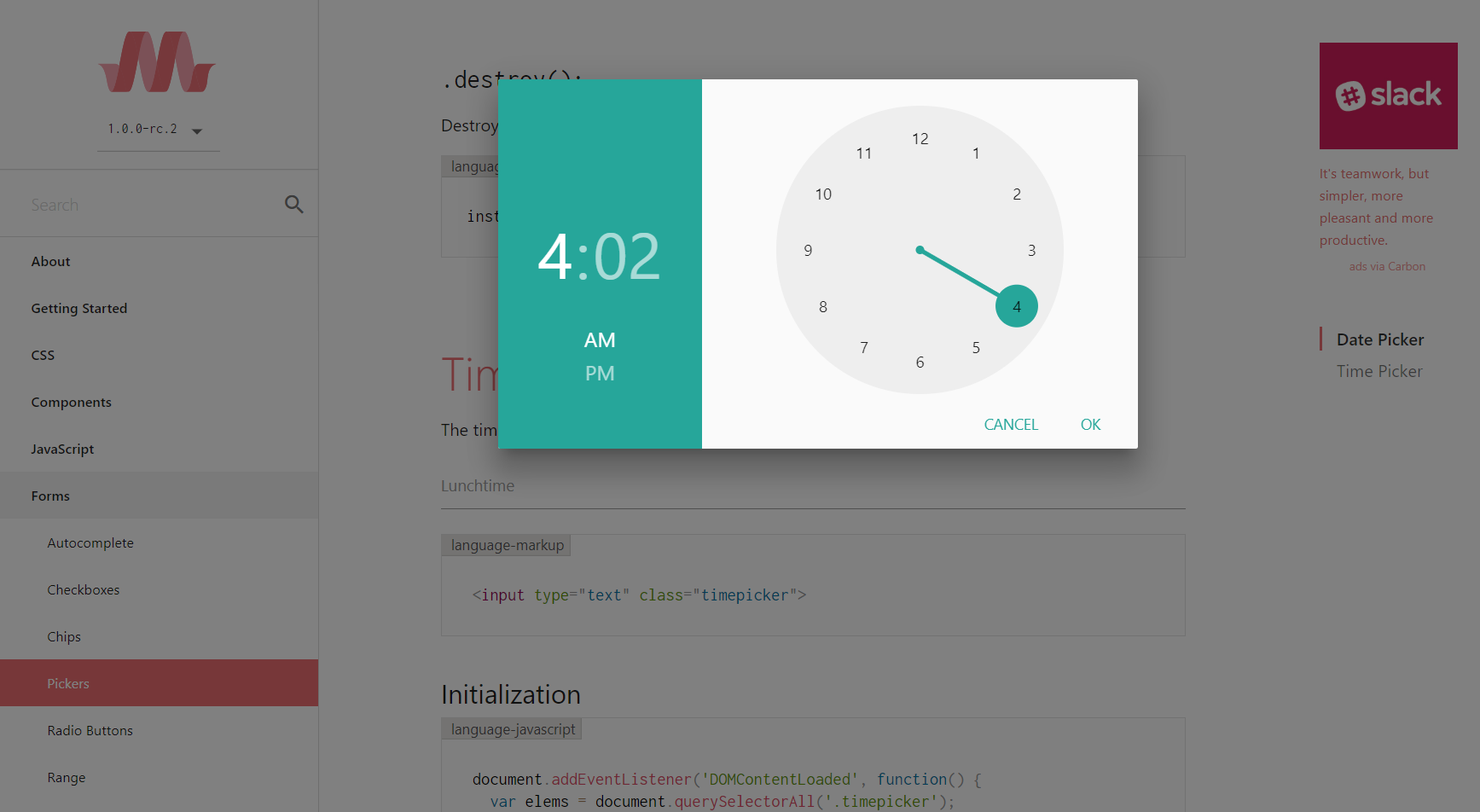

We found that using existing resources was much more efficient than trying to code something from scratch. One useful tool we found was the Materialize API. We liked its clock feature, which was more efficient than having users input the time manually.

Sound Design

In order to create sounds that correspond to different alarms, we used Ableton to edit stock sounds. For example, for our "start working now" sound, a couple of equalizers were added to the sound in order to boost the bass to the point where the sound would clip and be uncomfortable to listen to. Here are a few sounds:

Original alarm sound cropped and slightly bass boosted

Original alarm sound filtered

Strong alarm heavily bass boosted (warning: turn down volume)

Enter checkpoint sound

The Take Away

DoItEarly was an interesting project: we were tasked not only with the coding but also with the design. I learned how to apply the design process as well as how to critique other app prototypes. I was amazed at how much every aspect mattered, from intuitive user flow to correct wording on buttons. In terms of coding, I learned basic backend concepts using Node.js and refined my web development skills with HTML, CSS, and JavaScript. DoItEarly isn't fully functional yet, as it is only a prototype. The prototype itself could also be improved, for example by making the front end look nicer with visuals. However, the development of DoItEarly has been quite a learning experience thanks to my wonderful teammates.

Synopsis:

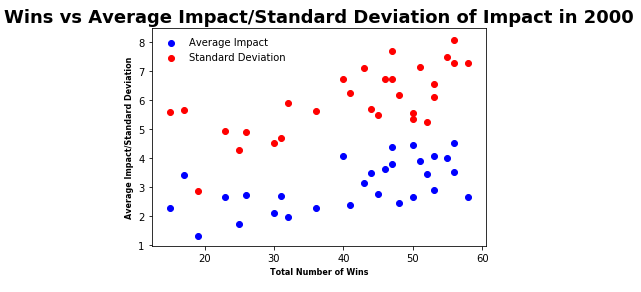

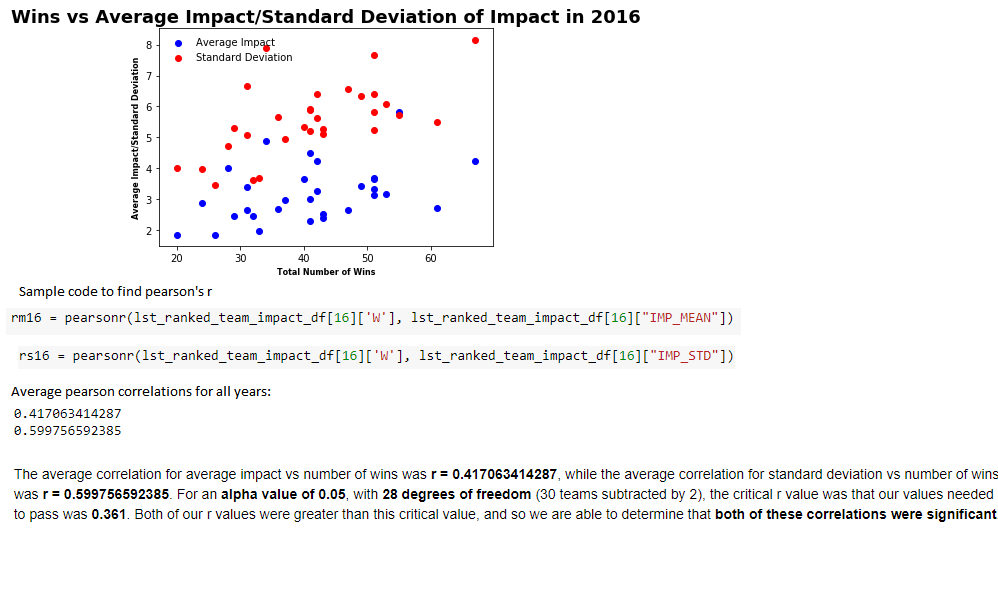

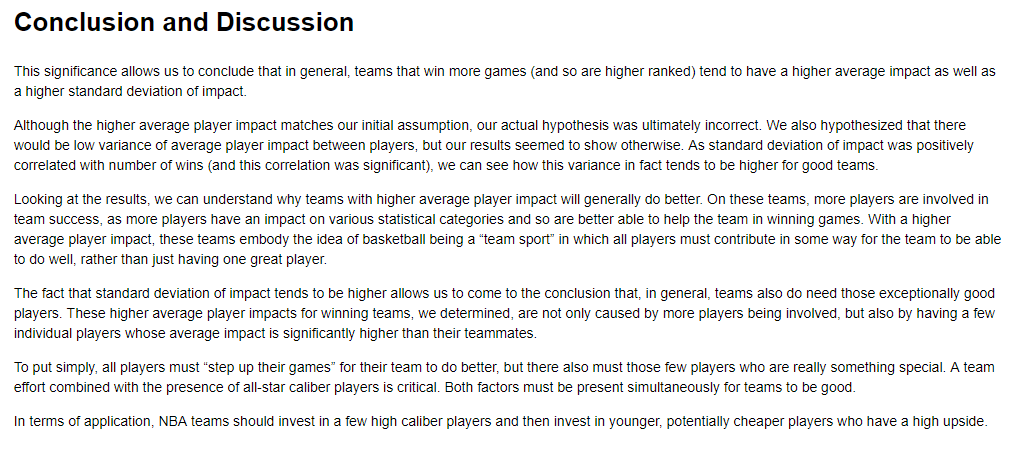

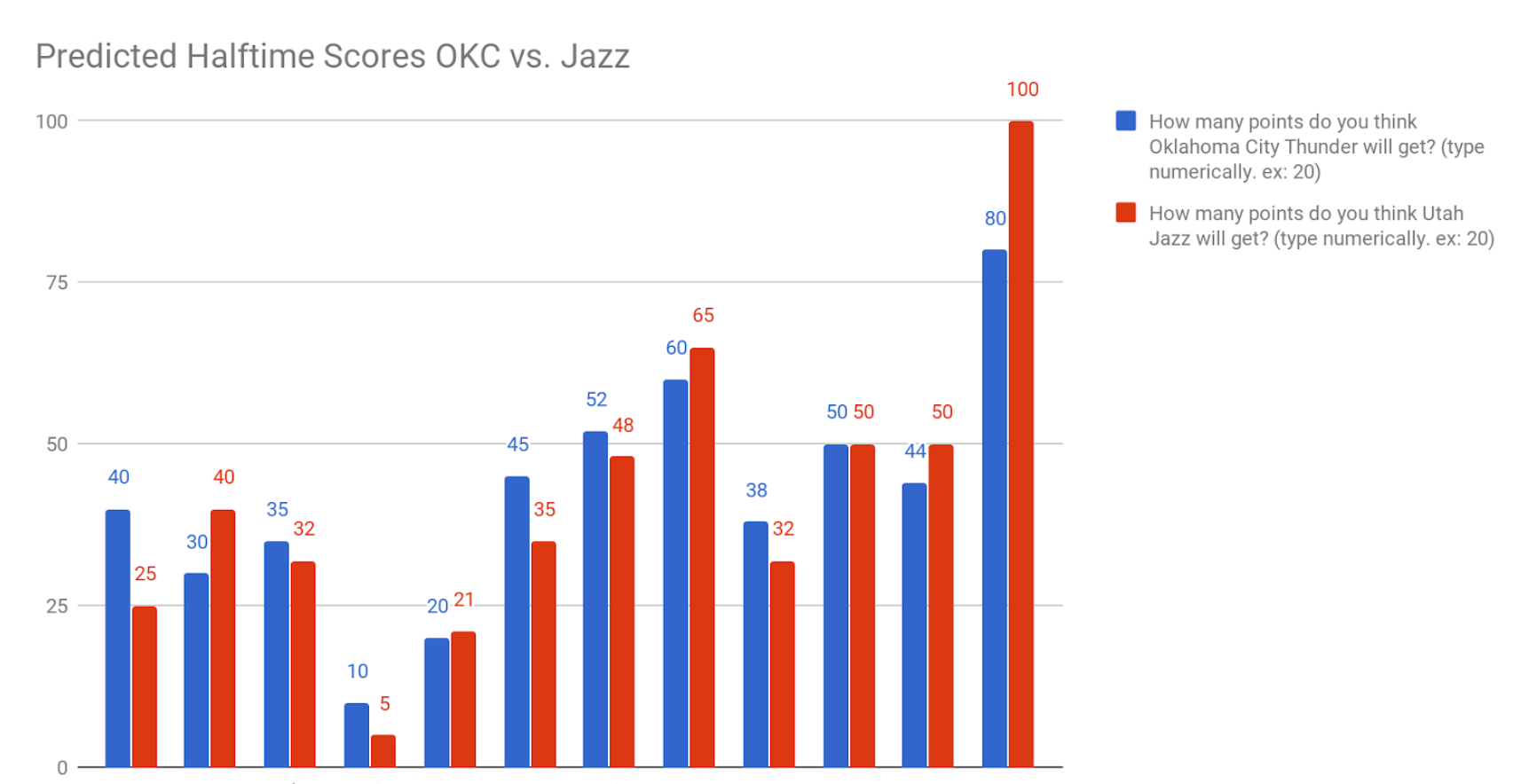

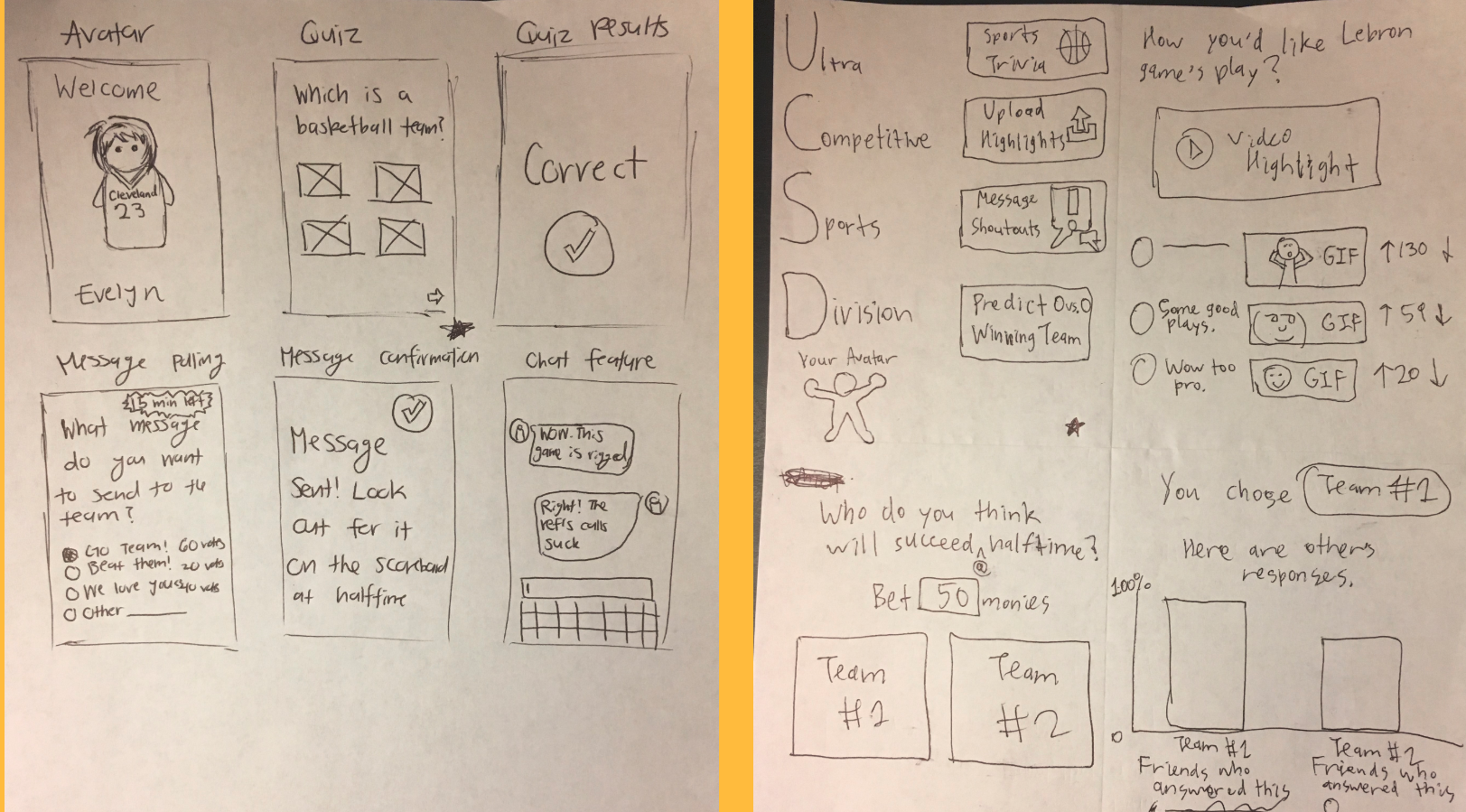

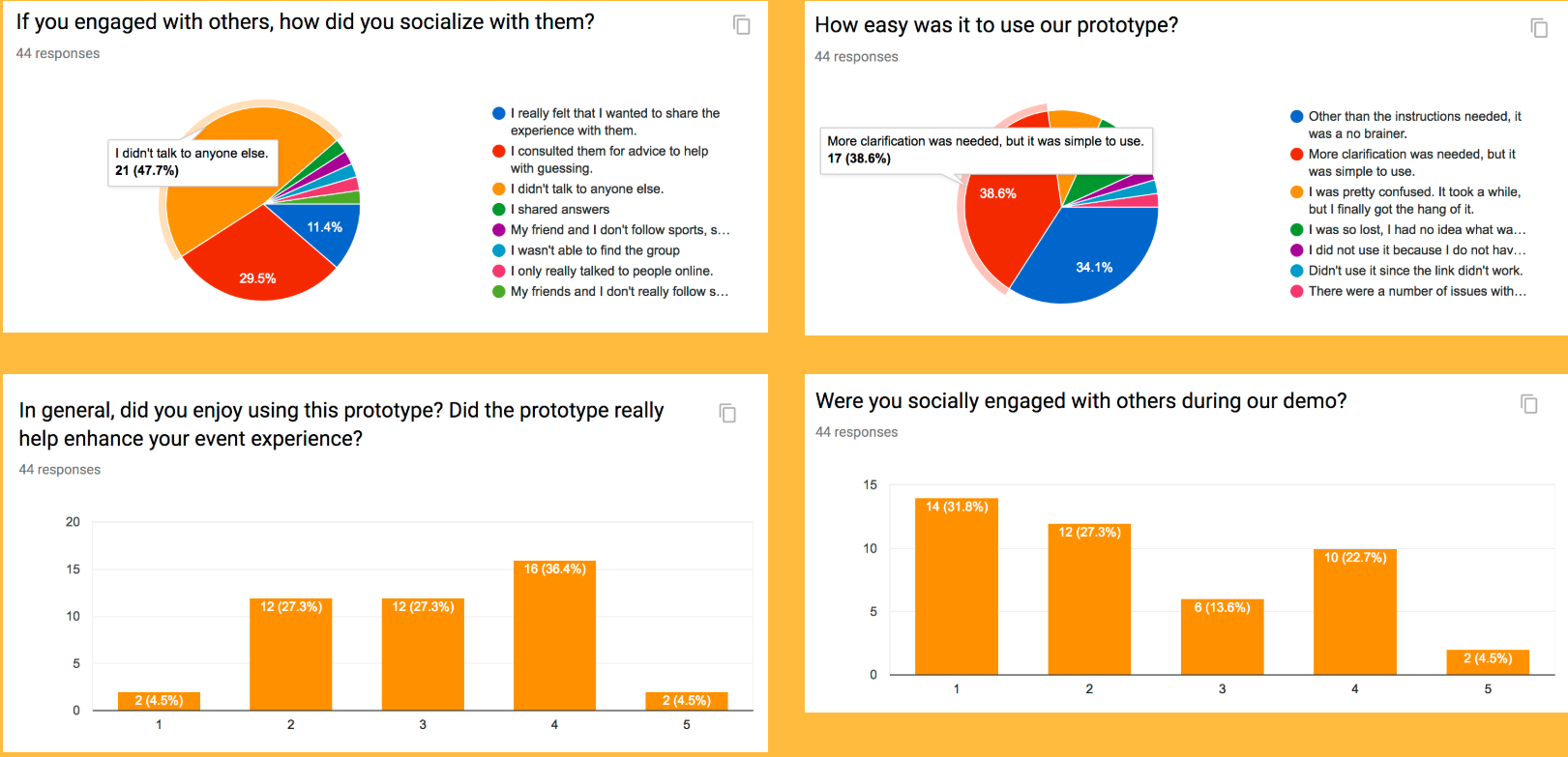

Disclaimer: I understand that this project may be hard to follow if you have limited knowledge of basketball. I will do my best to explain it to such audiences, but essentially, we are trying to find out whether teams do better with one extremely good player or with a handful of players who are above average.

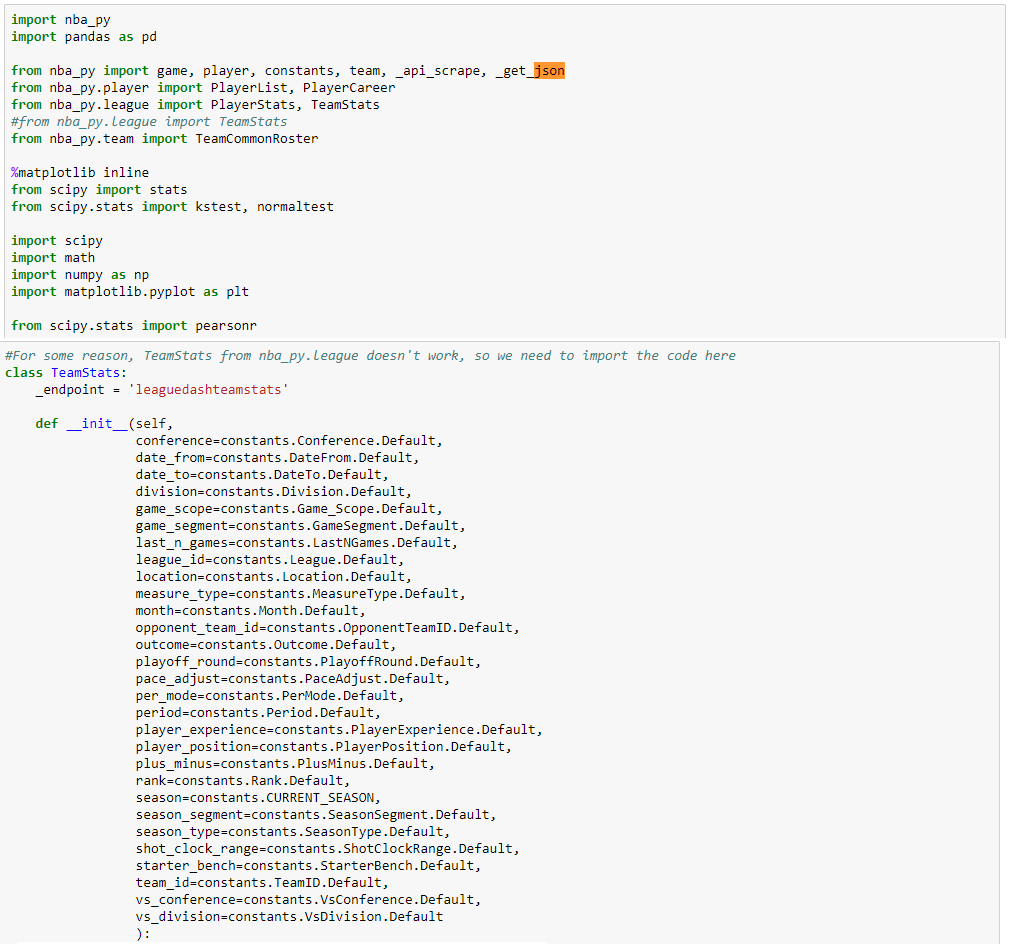

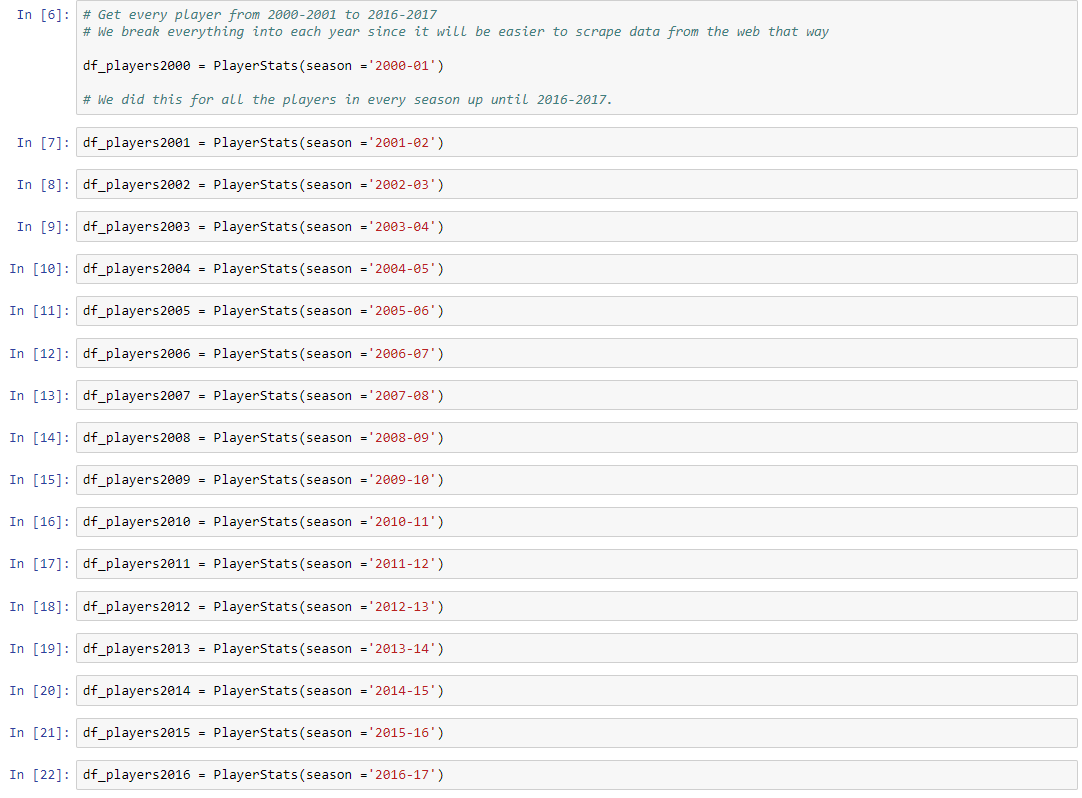

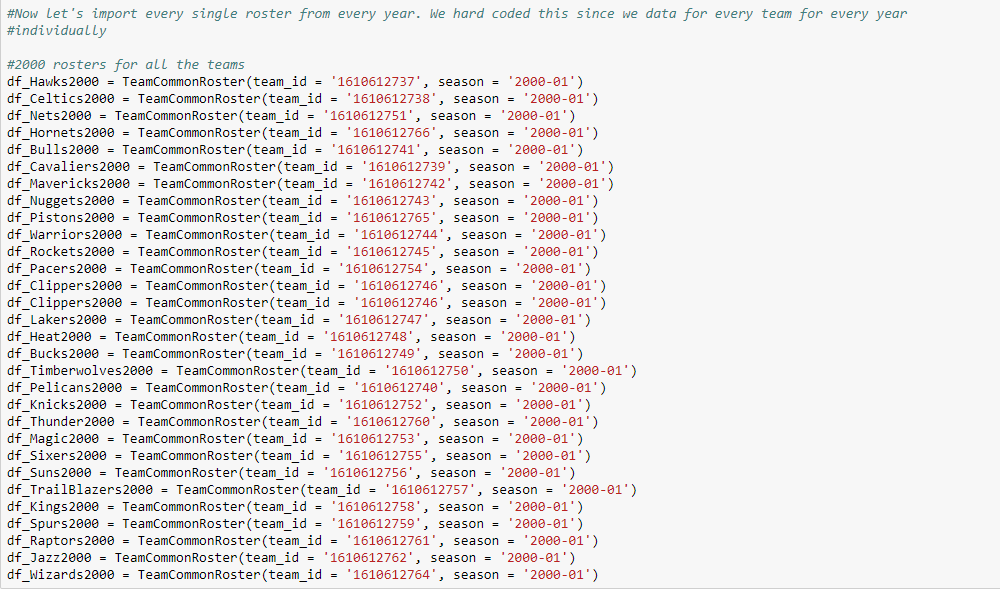

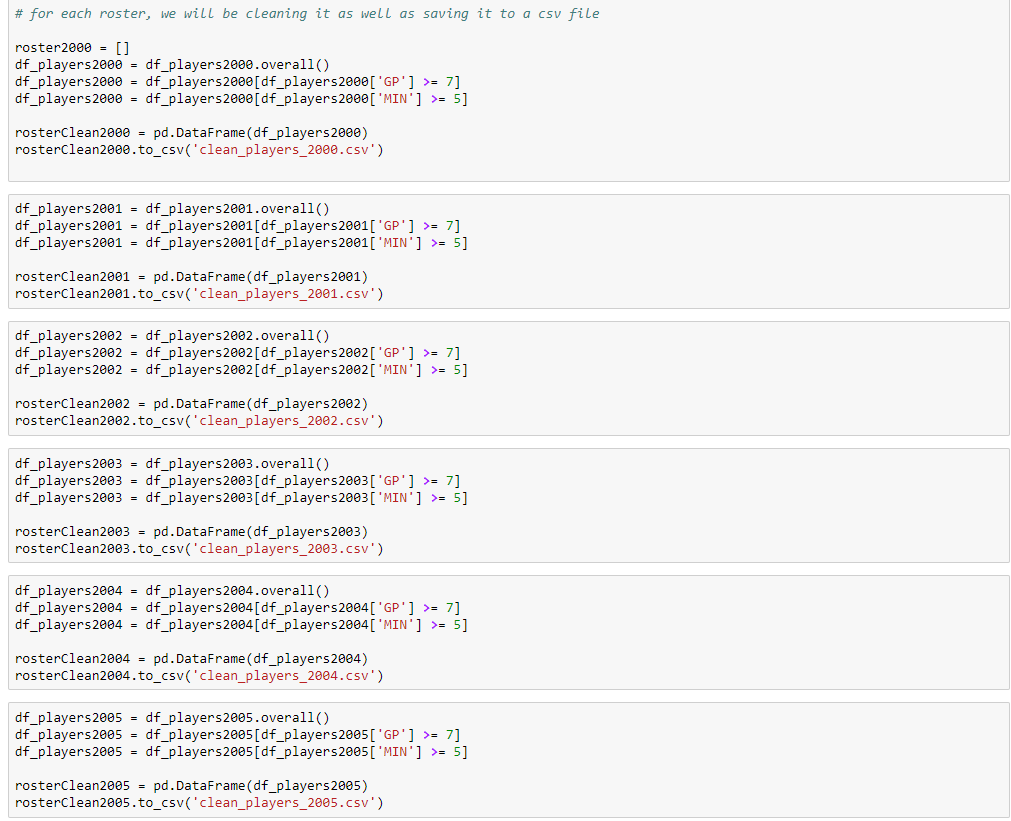

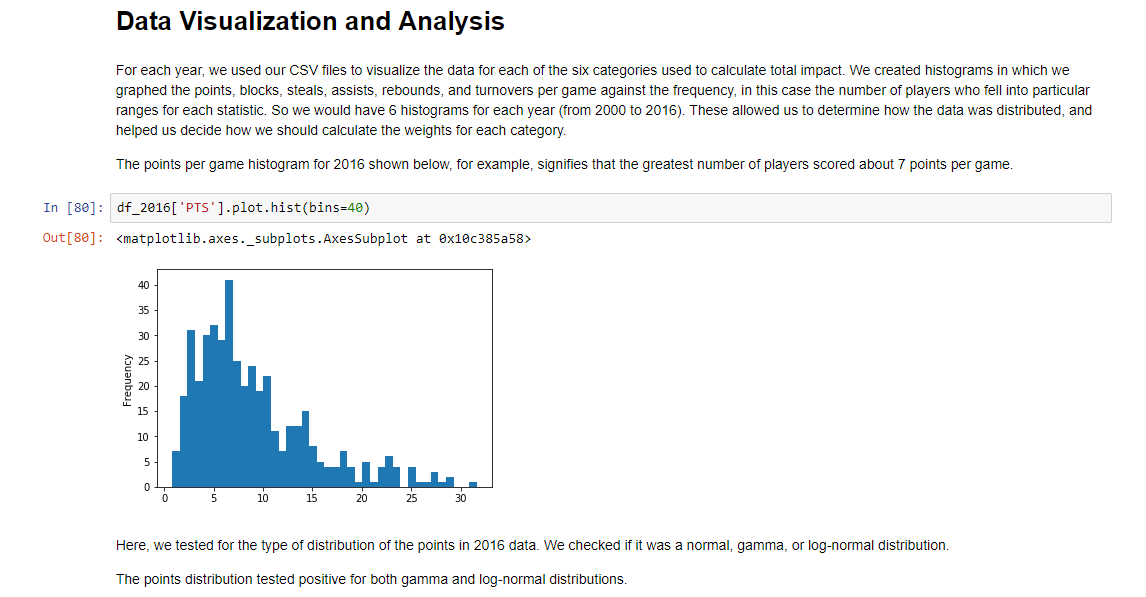

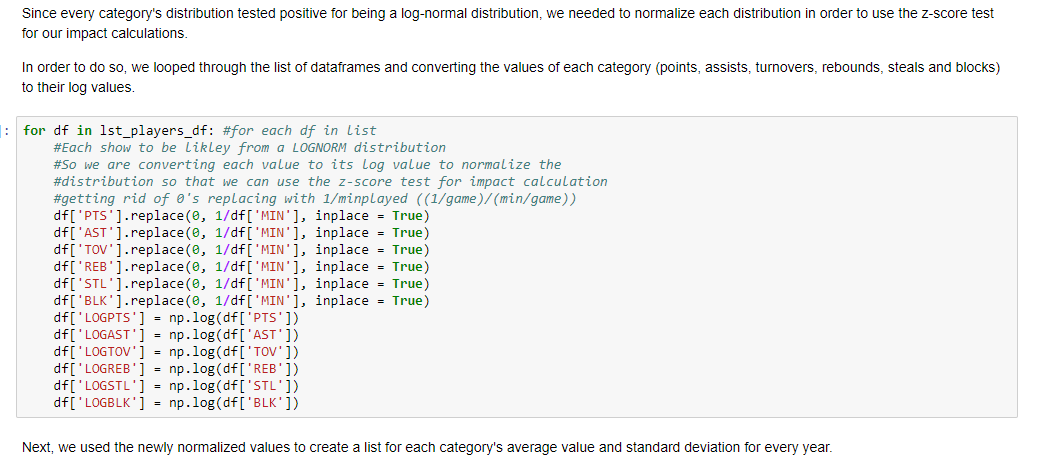

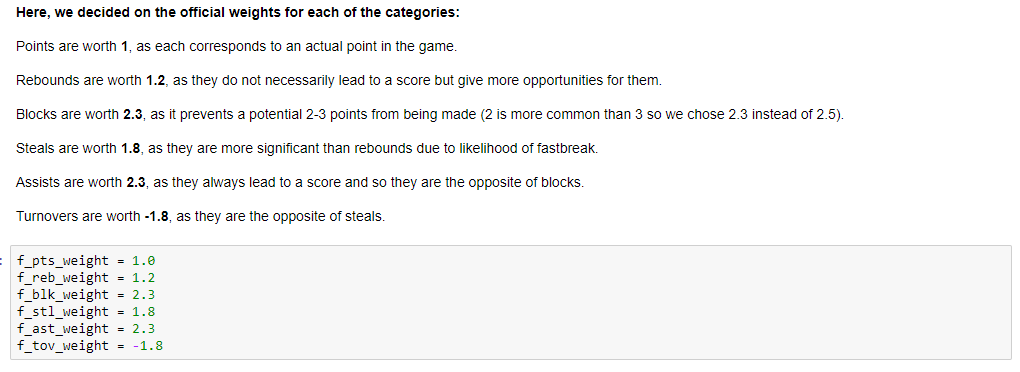

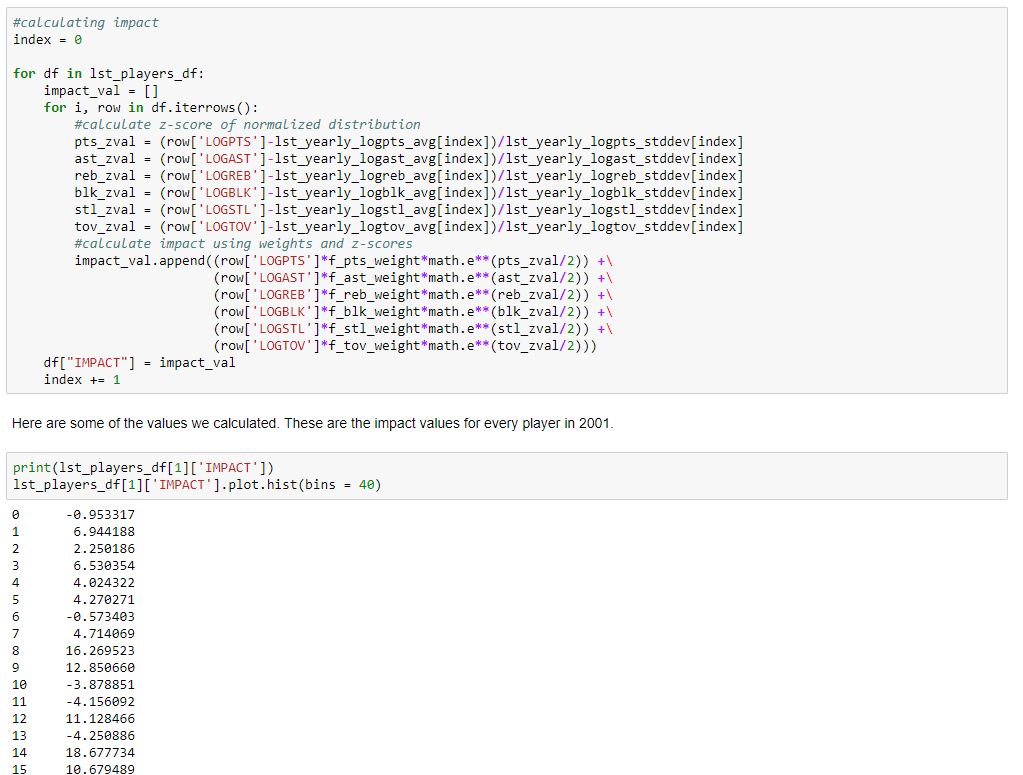

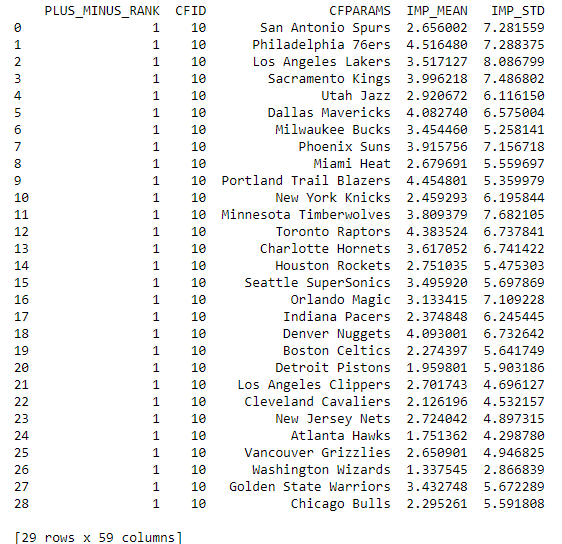

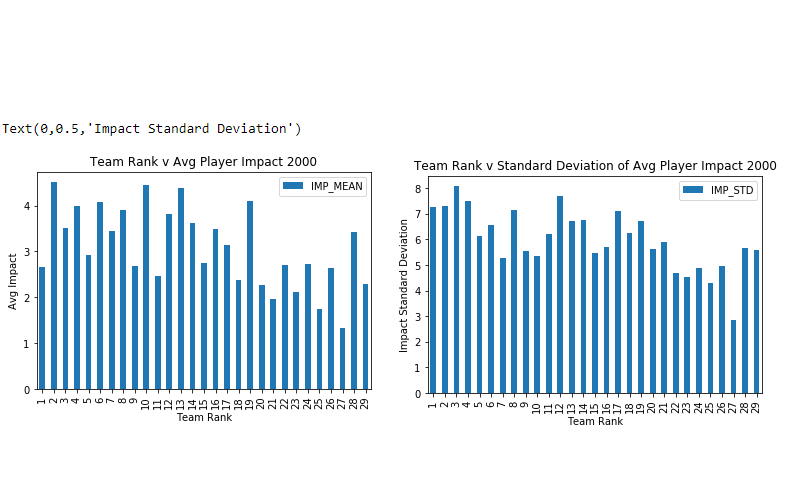

As avid NBA fans, we are interested not just in the gameplay of basketball, but in the statistics behind it. It is said that good teams have at least one superstar, someone who contributes stats that are higher than those of most other players. However, there are many other factors that lead to a team's success, including a good defense, a good offense, good coaching, good role players, etc. Teams tend to have different strengths and weaknesses, with a combination of many factors determining whether they do well or not. Although there are many statistics available, we want to see if we can determine which statistical trends (if any) generally result in success in the NBA. (Note: we will be testing data on all teams from the 2000-2001 season to the 2016-2017 season.)

This project can be viewed by cloning the repo and opening it in Jupyter. If you don't have Jupyter installed or want to save some time, here is the rundown in the form of a slideshow:

The Take Away

Saying that this data science project was a good learning experience would be an understatement. Prior to this, I had never seen anything like it, neither in any class nor on anyone else's portfolio. Coming back to this project, it took a while for me to relearn all the science. We answered a very simple question, yet the research behind it was enormous. It made me realize how we sometimes take information for granted: it may take years just to answer a very simple question. If I were to recreate this entire project solo, it would take many months to fill the roles of my teammates.